Note

Go to the end to download the full example code

Polyfit#

This tutorial shows a flexible inversion with an own forward calculation that includes an own jacobian. We start with fitting a polynomial of degree \(P\)

to given data \(y\). The unknown model is the coefficient vector \({\bf m}=[p_0,\ldots,p_P]\). The vectorized function for a vector \({\bf x}=[x_1,\ldots,x_N]^T\) can be written as matrix-vector product

We set up the modelling operator, i.e. to return \({\bf f}({\bf x})\) for given \(p_i\), as a class derived from the modelling base class. The latter holds the main mimic of generating Jacobian, gradients by brute force. The only function to overwrite is cw{response()}.

Python is a very flexible language for programming and scripting and has many packages for numerical computing and graphical visualization. For this reason, we built Python bindings and compiled the library pygimli. As a main advantage, all classes can be used and derived. This makes the use of GIMLi very easy for non-programmers. All existing modelling classes can be used, but it is also easy to create new modelling classes.

We exemplify this by the preceding example.

First, the library must be imported.

To avoid name clashes with other libraries we suggest to import pygimli and alias it to an easy name (as usually done for numpy or matplotlib), e.g. by

import numpy as np

import matplotlib.pyplot as plt

import pygimli as pg

The modelling class is derived from ModellingBase, a constructor is defined and the response function is defined. Due to the linearity of the problem we store the matrix \({\bf A}\), which is also the Jacobian matrix and use it for the forward calculation. A second function is just added as reference. We overwrite the method createJacobian as we know it but do nothing in the actual computation. If \({\bf J}\) depends on \({\bf m}\) this function must be filled.

class FunctionModelling(pg.Modelling):

"""Forward operator for polynomial interpolation."""

def __init__(self, nc, xvec, verbose=False):

super().__init__(verbose=verbose)

self.x_ = xvec

self.nc_ = nc

nx = len(xvec)

self.regionManager().setParameterCount(nc)

self.jacobian().resize(nx, nc)

for i in range(self.nc_):

self.jacobian().setCol(i, pg.math.pow(self.x_, i))

def response(self, model):

"""Return forward response by multiplying with kernel matrix."""

return self.jacobian() * model

def responseDirect(self, model):

"""Forward response step by step."""

y = pg.Vector(len(self.x_), model[0])

for i in range(1, self.nc_):

y += pg.math.pow(self.x_, i) * model[i]

return y

def createJacobian(self, model):

"""Do nothing (avoid default brute-force Jacobian)."""

pass # if J depends on the model you should work here

def createStartModel(self, data):

"""Create some starting model in the order of the data."""

return pg.Vector(self.nc_, np.mean(data)/self.nc_/3)

Let us create some synthetic data for some x values

Note the difference between error and noise. The error model is a scalar or a vector containing the standard deviations of the noise, which is an unknown realization of it. Errors are sometimes derived by stacking or estimated by using a relative and an absolute error contribution.

We now start by setting up the modelling operator, and inversion and run it.

fop = FunctionModelling(3, x)

# initialize inversion with data and forward operator and set options

inv = pg.frameworks.MarquardtInversion(fop=fop)

# We set model transformation to linear to allow for negative values

# (by default model parameters are expected to be positive!)

inv.modelTrans = pg.trans.Trans()

the problem is well-posed and does not need any regularization (lam=0) actual inversion run yielding coefficient model

fop: <__main__.FunctionModelling object at 0x7f7b6982d940>

Data transformation: <pygimli.core._pygimli_.RTrans object at 0x7f7b9be89960>

Model transformation: <pygimli.core._pygimli_.RTrans object at 0x7f7b6982c5e0>

min/max (data): 0.75/7.31

min/max (error): 2.16%/46.98%

min/max (start model): 0.53/0.53

--------------------------------------------------------------------------------

inv.iter 0 ... chi² = 2954.75

--------------------------------------------------------------------------------

inv.iter 1 ... chi² = 0.95 (dPhi = 99.97%) lam: 0.0

--------------------------------------------------------------------------------

inv.iter 2 ... chi² = 0.95 (dPhi = -0.00%) lam: 0.0

--------------------------------------------------------------------------------

inv.iter 3 ... chi² = 0.95 (dPhi = 0.00%) lam: 0.0

################################################################################

# Abort criterion reached: dPhi = 0.0 (< 0.5%) #

################################################################################

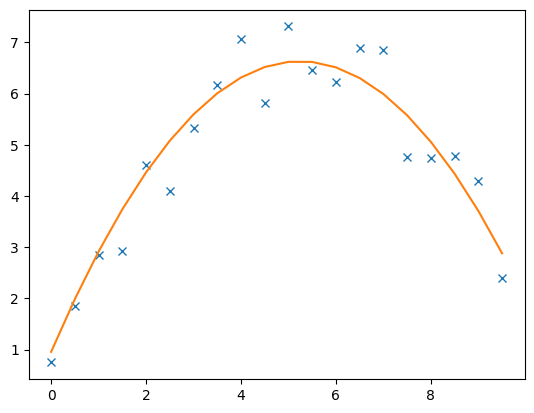

The data and model response are plotted by

The model contains the inverted coefficients

print(coeff)

3 [0.9560268156985832, 2.167015593973964, -0.2067697154645879]

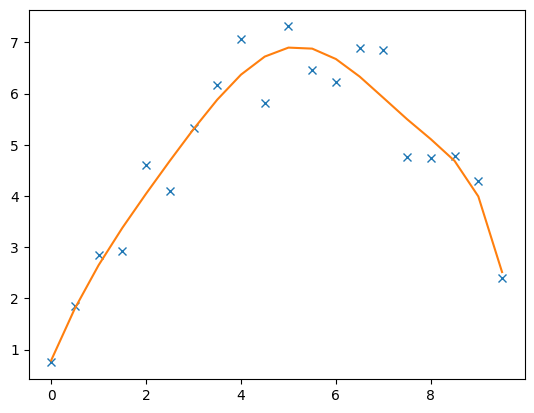

Of course the model can also be fit by higher or lower polynomials

fop: <__main__.FunctionModelling object at 0x7f7b6a1067f0>

Data transformation: <pygimli.core._pygimli_.RTrans object at 0x7f7b9be8a080>

Model transformation: <pygimli.core._pygimli_.RTrans object at 0x7f7b6982f9c0>

min/max (data): 0.75/7.31

min/max (error): 6.84%/66.81%

min/max (start model): 0.2/0.2

--------------------------------------------------------------------------------

inv.iter 0 ... chi² = 903726955864.56

--------------------------------------------------------------------------------

inv.iter 1 ... chi² = 58130191.53 (dPhi = 99.99%) lam: 0.0

--------------------------------------------------------------------------------

inv.iter 2 ... chi² = 13.66 (dPhi = 100.00%) lam: 0.0

--------------------------------------------------------------------------------

inv.iter 3 ... chi² = 0.97 (dPhi = 92.87%) lam: 0.0

--------------------------------------------------------------------------------

inv.iter 4 ... chi² = 0.97 (dPhi = 0.49%) lam: 0.0

################################################################################

# Abort criterion reached: dPhi = 0.49 (< 0.5%) #

################################################################################

8 [0.7884063680595086, 2.224665913575399, -0.387348400414236, -0.022287313612958548, 0.062243638936377105, -0.018012613843638392, 0.001964002287529287, -7.478618057832505e-05]

Note that the function tries to fit the noise which is also expressed in a chi-square value below 1, whereas the previous one was above 1. For small data vectors it is hard to reach exactly 1 (data fitted within noise). The chi-square value can be returned from the inversion object just like the absolute and relative RMS (root-mean-square) misfits.

print(inv.chi2(), inv.absrms(), inv.relrms())

0.9693293439470893 0.49227262364138463 9.50253013960583

As there is often misunderstanding among the terms, we are giving some background here. The root-mean-square of a vector is, as the name says, the root of the mean squared elements (N being the length of the vector)

We consider the misfit between data vector d and the forward response f(m). The simplest (absolute) measure is therefore called absolute RMS (ARMS):

which can also be computed by simple numpy functions

0.49227262364138463

In case of a large magnitude range (e.g. TEM voltages) one uses a logarithmic view (and axis) to look at the data values. Often the relative errors (absolute log errors) make more sense. For the relative RMS, the misfit values are divided by the data points itself:

9.50253013960583

9.329510387864607

So the absolut logarithmic misfit is close to the relative misfit. In inversion, neither of the quantities is minimized. Instead, we use the error to compute an error-weighted misfit (ERMS), sometimes also referred to as inversion RMS (to recognize since it is without data unit or %).

It represents the standard deviation of the error-weighted misfit.

weighted_misfit = (y - inv.response) / error

print(np.sqrt(np.mean((weighted_misfit**2))))

0.9845452472827693

For historical statistical reasons, instead of the standard deviation the variance is used and referred to as chi square.

whereas \(\Phi_d\) is the data objective function minimized in inversion.

print(np.mean((weighted_misfit**2)))

0.9693293439470893

The whole history of the chi-square fit can be looked at by

print(inv.chi2History)

[903726955864.5553, 58130191.5321299, 13.664081248774073, 0.9740662574947402, 0.9693293439470893]